We’ve all heard the statistics:

85% of organizations will be “cloud first” by 2025.

58% of organizations said over half their workloads will be running in the cloud one year from now.

60% of organizational data is now in the cloud.

More and more organizations are choosing SaaS-based applications and a cloud-first IT strategy to modernize their technology stack. The intentions are good: lower costs (theoretically), greater resiliency and elasticity, flexible licensing, and much more. Unfortunately, the sheer number of point solutions on the market has led to vendor overload, data silos, and a technical skills gap across the enterprise.

Rise of the Platform

As a solution to these challenges, organizations are adopting a unified enterprise data platform. Requirements for such a platform include:

Latest innovations in data and analytics capabilities

Support for multiple personas (IT, data engineers, analysts, data scientists)

Integration with the existing tech stack

Built-in governance to meet IT standards

Extensibility through APIs and SDKs for customization

Marketplaces and communities for exchanging content and ideas

Generative AI (now table stakes)

Meeting a few of these requirements would be good. Meeting most would be a win. Meeting all? Now we’re in unicorn territory.

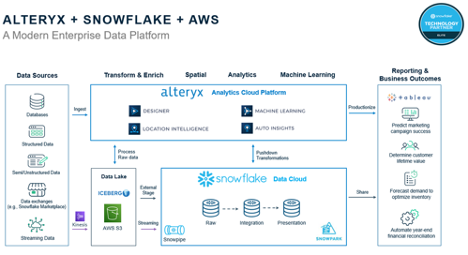

The Modern Enterprise Data Platform

Alteryx has partnered with AWS and Snowflake to create an AI Platform for Enterprise Analytics that checks all the boxes. Leveraging the power of AWS and Snowflake, Alteryx Analytics Cloud automates data and analytic processing at scale to enable intelligent decisions across the enterprise.

The remainder of this blog will focus on how AWS, Snowflake, and the Alteryx AI Platform for Enterprise Analytics are integrated to provide a streamlined and unified enterprise data platform.

Alteryx Analytics Cloud Overview

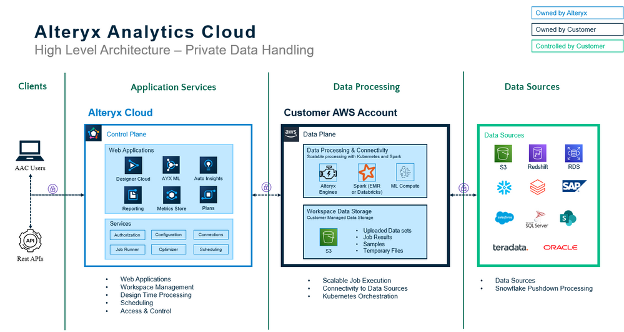

At a high level, Alteryx Analytics Cloud provides a single, unified platform where an organization can manage its data and analytics automation to drive intelligent decisions across the enterprise. Analytics Cloud includes applications for building data pipelines, performing geospatial analysis, building predictive models, and even automatically generating insightful dashboards. It does this in a cloud-first, elastic, and resilient environment, and it provides the operational controls needed to govern these processes so that results are consistent and predictable.

Alteryx Analytics Cloud uses a concept called Workspaces to let organizations segregate content, assets, data, reports, models, and so on, based on their requirements. Workspaces could be based on lines of business (LOBs), project teams, departments, or other factors. Each Workspace has its own “Workspace Storage,” a dedicated storage environment for uploaded data files, sample data, and job results specific to that Workspace. By default, Alteryx provides and hosts a storage location for each Workspace. However, by using Private Data Storage, customers can use their own AWS S3 location for the Workspace Storage. This gives organizations greater flexibility in defining custom authorization assignments for specific folders and in setting up automated clean-up procedures to remove old files. Perhaps more importantly, it allows organizations to keep all those data assets stored in their own AWS cloud account.
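To make the “automated clean-up” idea concrete, here is a minimal sketch of an S3 lifecycle rule that expires old files under one folder of a customer-owned bucket. The folder name and the 30-day retention period are hypothetical, and nothing here is Alteryx-specific: it is simply the standard S3 lifecycle configuration structure, built as a plain Python dictionary.

```python
import json


def build_cleanup_rule(prefix: str, expire_after_days: int) -> dict:
    """Build an S3 lifecycle configuration that expires objects under a prefix."""
    if expire_after_days < 1:
        raise ValueError("expire_after_days must be at least 1")
    return {
        "Rules": [
            {
                # Rule ID derived from the prefix, purely for readability.
                "ID": f"expire-{prefix.strip('/').replace('/', '-')}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


# Hypothetical folder for job results inside the Workspace Storage bucket.
config = build_cleanup_rule("workspace-finance/job-results/", 30)
print(json.dumps(config, indent=2))
```

A dictionary of this shape can be passed as the `LifecycleConfiguration` argument of boto3's `put_bucket_lifecycle_configuration` call, or pasted as JSON into the bucket's lifecycle settings.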

Private Data Processing

For even greater control over how their data processing jobs are handled, customers can elect to use “Private Data Processing” for job execution. With Private Data Processing, Alteryx runs jobs inside a customer-owned AWS VPC so that all data connectivity and processing occurs within the customer’s network. To accomplish this, Alteryx leverages several AWS services, such as IAM roles and policies, EC2 instances, and an EKS cluster for scalable, elastic, containerized job execution.
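As an illustration of the kind of IAM building block involved, the sketch below builds a generic cross-account trust policy, a common AWS pattern for letting an external service assume a role inside a customer-owned account. This is an assumption for illustration only: the source does not describe the exact roles or policies Alteryx creates, and the account ID and external ID shown are placeholders.

```python
import json


def build_trust_policy(trusted_account_id: str, external_id: str) -> dict:
    """Build a generic IAM trust policy for cross-account role assumption."""
    if not (trusted_account_id.isdigit() and len(trusted_account_id) == 12):
        raise ValueError("AWS account IDs are 12 digits")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The external account that is allowed to assume the role.
                "Principal": {"AWS": f"arn:aws:iam::{trusted_account_id}:root"},
                "Action": "sts:AssumeRole",
                # An external ID guards against the "confused deputy" problem.
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


# Placeholder values, not real Alteryx identifiers.
policy = build_trust_policy("111122223333", "example-external-id")
print(json.dumps(policy, indent=2))
```

The permissions attached to such a role would then be scoped to the resources named above (for example, the EC2 and EKS resources inside the VPC), which is what keeps processing inside the customer's network boundary.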

From RAW to Production

Snowflake’s Data Cloud Deployment Framework provides best practices for customers to consider as they build their data architecture strategy. As a foundation for this strategy, Snowflake recommends working with data in stages: a RAW ingestion layer, an integration layer where data modeling standards and business rules are applied, and a final presentation layer consumed by reports and business applications. It is very common for the RAW layer to point to an EXTERNAL STAGE location where raw files are landed “as is.” This location is commonly AWS S3 object storage, because of its flexibility in supporting a variety of data types. Snowflake can then ingest from S3 using various File Format and Copy Into options. With the Alteryx Analytics Cloud Platform, data engineers can define connections to AWS S3 and the Snowflake data layers and then build powerful ingestion and transformation pipelines that implement the desired data architecture. All of this can be accomplished with self-service tooling, avoiding hand-written File Format, Create, and Copy Into SQL statements.
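For readers who have not written them by hand, here is a rough sketch of the kind of SQL that self-service tooling spares you from: a file format, an external stage over S3, and a `COPY INTO` load for the RAW layer. The bucket path, object names, and storage integration name are all hypothetical, and the CSV options are just one plausible choice. The helper only builds the statements as strings; it does not connect to Snowflake.

```python
def raw_layer_sql(bucket_path: str, stage: str, file_format: str,
                  storage_integration: str, target_table: str) -> list[str]:
    """Return the three statements needed to load staged CSV files into a RAW table."""
    create_format = (
        f"CREATE OR REPLACE FILE FORMAT {file_format}\n"
        f"  TYPE = CSV SKIP_HEADER = 1 FIELD_OPTIONALLY_ENCLOSED_BY = '\"';"
    )
    create_stage = (
        f"CREATE OR REPLACE STAGE {stage}\n"
        f"  URL = '{bucket_path}'\n"
        f"  STORAGE_INTEGRATION = {storage_integration}\n"
        f"  FILE_FORMAT = (FORMAT_NAME = '{file_format}');"
    )
    # The stage already carries the file format, so COPY INTO only needs a pattern.
    copy_into = (
        f"COPY INTO {target_table}\n"
        f"  FROM @{stage}\n"
        f"  PATTERN = '.*[.]csv';"
    )
    return [create_format, create_stage, copy_into]


# Hypothetical names throughout.
for statement in raw_layer_sql(
    bucket_path="s3://example-bucket/raw/orders/",
    stage="raw.orders_stage",
    file_format="raw.csv_with_header",
    storage_integration="s3_int",
    target_table="raw.orders",
):
    print(statement, end="\n\n")
```

Even in this small case there are three objects to keep in sync, which is the maintenance burden the paragraph above is pointing at.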

Snowflake Pushdown Processing

An important aspect of a data engineering pipeline is where its jobs are processed. As mentioned in the Private Data Processing section, jobs can be configured to execute in the customer-owned AWS VPC. However, when all data assets are either in Snowflake or in the Private Data Storage (AWS S3) location, and that location is configured as an External Stage, the Alteryx Analytics Cloud Platform will automatically “push down” the processing to the Snowflake warehouse defined by the connection. This avoids data movement and reduces job runtimes, because the jobs are processed directly in the powerful Snowflake warehouse.
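The pushdown condition described above boils down to a simple rule over a job's data sources. The sketch below expresses that rule in a few lines; it is an illustration of the logic as stated in this post, not Alteryx's actual implementation, and the `DataSource` type and its fields are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataSource:
    name: str
    location: str                    # e.g. "snowflake", "s3", "postgres"
    is_external_stage: bool = False  # only meaningful for S3 locations


def can_push_down(sources: list[DataSource]) -> bool:
    """True only if every source is in Snowflake or in S3 set up as an External Stage."""
    if not sources:
        return False
    return all(
        s.location == "snowflake"
        or (s.location == "s3" and s.is_external_stage)
        for s in sources
    )


orders = DataSource("orders", "snowflake")
clicks = DataSource("clickstream", "s3", is_external_stage=True)
crm = DataSource("crm_export", "postgres")

print(can_push_down([orders, clicks]))       # all sources qualify
print(can_push_down([orders, clicks, crm]))  # one source lives elsewhere
```

The key point is the `all(...)`: a single source outside Snowflake or the staged S3 location is enough to rule out pushdown for the whole job.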

Streaming

Many organizations are moving beyond traditional batch processing to adopt streaming use cases, and a successful enterprise data platform should support both. AWS offers Amazon Kinesis for ingesting streaming data, and it can easily be used to land that data in an S3 bucket. From that location, Snowflake’s Snowpipe can load the files into Snowflake as they arrive; alternatively, Snowpipe Streaming can write rows into Snowflake directly, without staging files first. Additionally, Alteryx Analytics Cloud allows for event trigger-based scheduling: you can configure Alteryx to watch an S3 bucket for a specific object to be added or updated, and that “event” will trigger an action in Alteryx. This action could be the execution of a Designer Cloud workflow, a Designer Desktop workflow through Cloud Execution for Desktop, or even a more sophisticated Plan.
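To show what “watching an S3 bucket” means in practice, here is a small sketch that inspects a standard S3 event notification and picks out newly added or updated objects under a watched folder. The bucket name, prefix, and sample event are hypothetical, and this is not how Alteryx implements its triggers; it only illustrates the matching step any such trigger has to perform.

```python
from urllib.parse import unquote_plus


def matching_keys(event: dict, bucket: str, prefix: str) -> list[str]:
    """Return object keys from an S3 event that were created or overwritten under prefix."""
    keys = []
    for record in event.get("Records", []):
        # In S3, both new uploads and overwrites arrive as ObjectCreated events.
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        s3 = record.get("s3", {})
        if s3.get("bucket", {}).get("name") != bucket:
            continue
        # Object keys are URL-encoded in event notifications.
        key = unquote_plus(s3.get("object", {}).get("key", ""))
        if key.startswith(prefix):
            keys.append(key)
    return keys


# Trimmed-down sample event with hypothetical names.
sample_event = {
    "Records": [
        {
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "example-landing-bucket"},
                "object": {"key": "landing/orders/daily+extract.csv"},
            },
        },
        {
            "eventName": "ObjectRemoved:Delete",
            "s3": {
                "bucket": {"name": "example-landing-bucket"},
                "object": {"key": "landing/orders/old.csv"},
            },
        },
    ]
}

for key in matching_keys(sample_event, "example-landing-bucket", "landing/orders/"):
    print(f"would trigger a workflow for: {key}")
```

Each matching key would correspond to one “event” in the sense used above, at which point the configured workflow or Plan would run.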

Snowpark Execution

One exciting recent announcement from Snowflake is Snowpark Container Services, which runs containerized, non-SQL workloads directly inside Snowflake. Alteryx is proud to integrate this innovative feature through Snowflake Execution for Desktop. With this capability, Alteryx Designer users will be able to save desktop-built workflows to the Alteryx Analytics Cloud Platform and execute them directly in Snowflake using a Snowpark container execution service.

Further Reading

For more information on Alteryx Analytics Cloud, these resources are a great place to get started:

The Alteryx AI Platform for Analytics