Generative AI has quickly become an undisputed game changer in business. 82% of business leaders agree that AI is significantly impacting organizational goals, and 46% of board members have stated that generative AI is their top priority over everything else.

We have moved beyond the stage of advocating for the adoption of generative AI; the focus is now on how businesses will use it. With genAI now reaching the peak of the hype cycle, IT teams are realizing that there is one clear differentiating element that can make or break a generative AI project: data.

Data is one of the most significant pieces of a successful generative AI program; teams need access to high-quality, governed, and reliable data to run tests, experiment, and explore results.

Are IT teams and their data stacks ready? Are their systems, technologies, and business cultures set up for the massive shift that is generative AI?

We interviewed 3,100 global IT leaders about their data stacks, organizational structure, and approaches to data strategy. Here’s what we learned.

Businesses are confident in their data. Should they be?

Figure 1 – What level of trust do you have in your organization’s data?

Throughout the survey responses, one theme emerged: overall, IT leaders are satisfied with their data. Over half of companies (54%) rated their data maturity as good or advanced, and 76% trust their data.

This is good news, right?

Turns out there are some cracks in the facade.

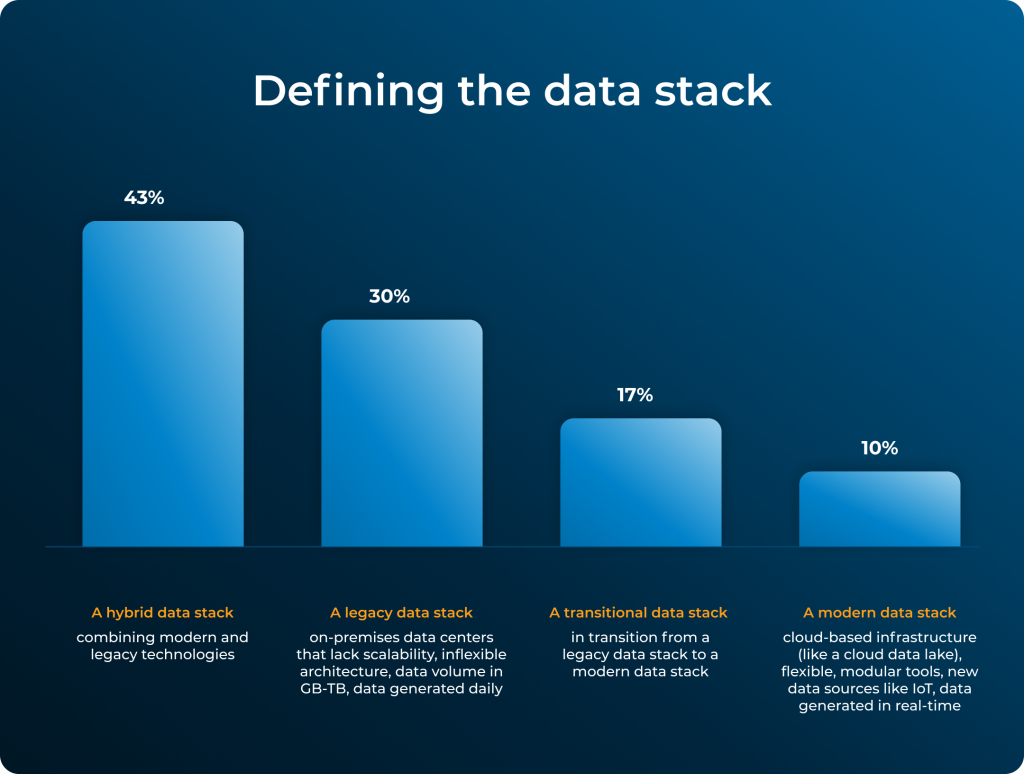

Leaders do feel confident in their data, but a little digging turns up evidence to the contrary. Only 10% of businesses surveyed state they have a “modern” data stack, and almost half (47%) are updating their data stack infrastructure to make it more modern. One in five companies (22%) faces data bias challenges, and 20% need help with data quality. While this doesn’t represent the majority, it’s enough to raise some questions.

How does this square with the confidence that leaders are feeling? Addressing data quality and bias could be the key to getting the remaining 24%, those who did not report trusting their data, into the same positive position. In fact, you’ll see below that IT leaders ranked data quality as their top goal for new technology investments. The secret to success in a genAI-augmented world will be doubling down on efforts to improve or maintain data quality and infrastructure throughout the disruption.

Is it time for the death of the spreadsheet?

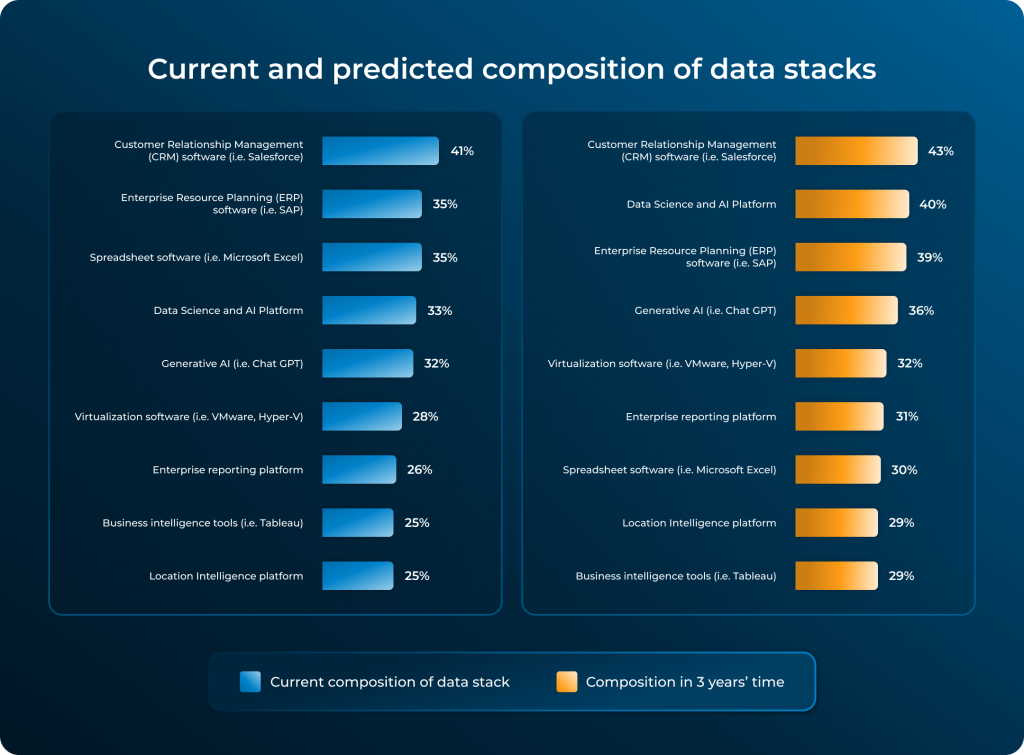

Figure 2 – What is the composition of your data stack currently? And what do you expect your data technology stack to look like in 3 years’ time?

Is now finally the time for the spreadsheet to bite the dust? We asked IT leaders what technologies make up their current data stacks, and it’s what you would expect: the top three were CRM (Customer Relationship Management) software, ERP (Enterprise Resource Planning) software, and spreadsheets.

When we asked about the future makeup of the data stack, we saw some shifts. IT leaders expected the top three elements of the data stack in three years to be CRM software, data science and AI platforms, and ERP software. Spreadsheets dropped from 3rd in the stack to 7th.

What’s driving this? Is the spreadsheet on its way out?

The increase in data science and AI platforms from 4th to 2nd position in the future data stack, along with generative AI’s close 4th position, could partially explain this. IT leaders likely hope these more advanced platforms will allow companies to automate many tedious, manual aspects of managing data — and, perhaps even more significantly, make sophisticated modeling and predictive capabilities more accessible.

So, does this mean the end of the spreadsheet? Our data indicates no. Even after falling from 3rd to 7th place in the data stack, spreadsheets are still expected to make up 31% of the data stack in 3 years. So, it turns out that reports of the death of the spreadsheet are greatly exaggerated. However, it may be true that teams will rely less on spreadsheets for sophisticated data integration, manipulation, and analysis while other technologies step in.

How are data stacks built, anyway?

Figure 3 – Would you classify your organization’s data stack as:

How a data stack is built says a lot about a company’s data strategy; a business with a data stack in the cloud will approach its strategies differently than a company managing and maintaining legacy and on-prem systems.

So, what is driving how a data stack is built? It’s a bit of a chicken-and-egg scenario: does strategy build the stack, or does the stack drive the strategy?

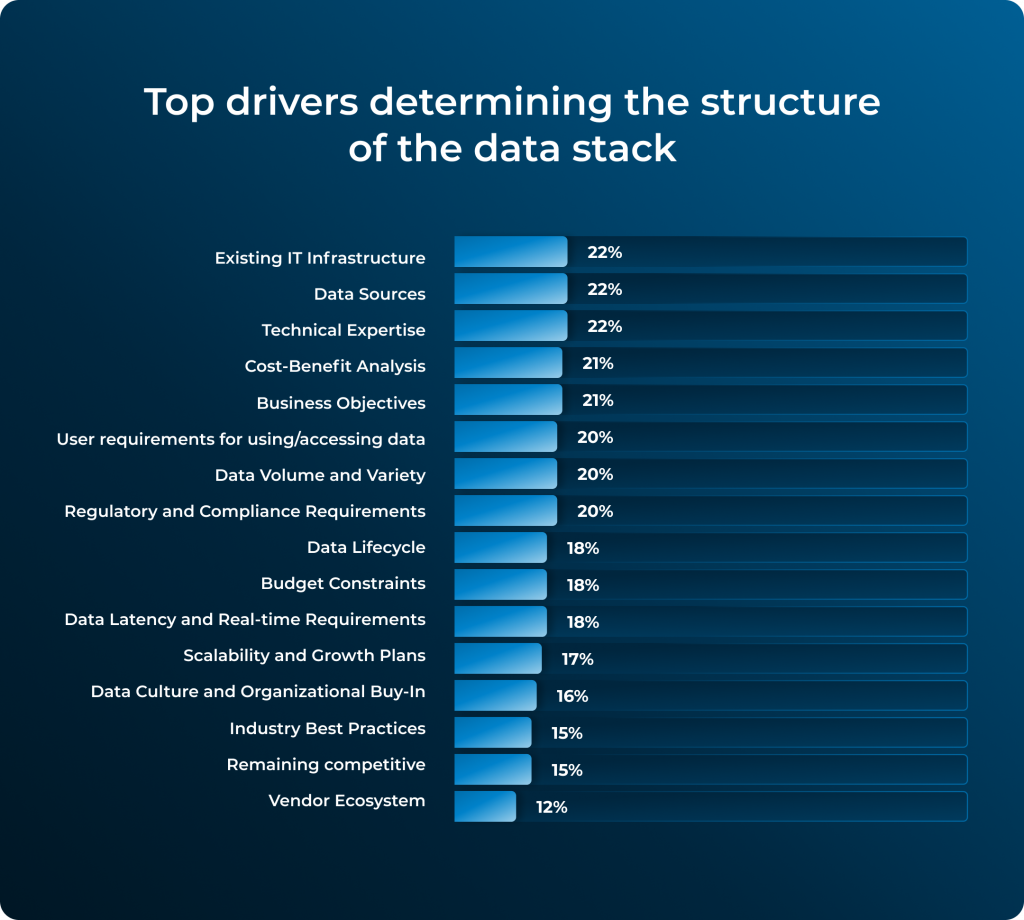

Figure 4 – What are the top 3 drivers determining the structure of your organization’s data stack?

According to our data, the top three drivers of a data stack structure are:

- Existing IT infrastructure (22%)

- Data sources (22%)

- Technical expertise (22%)

Interestingly, these three factors narrowly outrank business objectives, which came slightly behind at 21%.

This may help explain why so many companies have hybrid data stacks; not surprisingly, in the current economic climate, leaders feel that they must work with the infrastructure investments they already have and build strategy from there.

What about new technology investments? The top factors for net-new technology are:

- Cost (32%)

- Ease of use (29%)

- Security and compliance credentials (27%)

To add another dimension of information to this picture, we asked IT leaders what they were looking for in their new technology investments – the top response was improving data quality (23%).

Are data teams set up for AI failure?

Outside of the technology powering data stacks, how data teams are managed and organized, and the operating procedures around data, will significantly affect businesses’ ability to adopt and adapt to generative AI. We asked several questions about how data teams are run, and the results point to a few scenarios that may hinder generative AI adoption.

Who owns data?

When asked who owns data within organizations, the most common response was the CDO (22%), though the results varied widely and didn’t show a consensus across respondents regarding data ownership.

For 11% of companies, the board of directors was the ultimate owner of the data; for 8% of businesses, senior executives owned the data.

While there’s no correct answer for who should own data, the lack of consensus may be concerning when data access and management are requirements for a successful generative AI implementation.

Who owns the budget?

IT teams, in general, are responsible for data technology budgets, but the way those budgets are allocated and adjusted may have made generative AI adoption difficult over the last year.

Over half of businesses (54%) state that budgets are not reviewed or adjusted throughout the year: if other priorities, projects, or spending needs arise after budgets are allocated, the budgets cannot be changed. This must have been a considerable challenge in 2023, when the pressure to adopt generative AI grew rapidly.

Considering how quickly generative AI moved in the last year and how quickly it continues to change, how IT budgets are allocated or reviewed may need to be revised to allow for innovation.

How are data teams structured?

Two-fifths of companies (41%) do not have a centralized data or analytics function that maintains data as a shared resource for the business; instead, individual departments manage their data. On its own, this need not hinder generative AI, but these departments don’t seem to be sharing data across the company: 48% of respondents stated that data is kept within the department that generates it.

This further emphasizes that data has no clear owner and is isolated in silos. Generative AI delivers the most impact when it can draw on as much clean, accessible, and governed data as possible. It will be interesting to see if this approach changes as generative AI adoption moves across the business.

What is next for IT teams and genAI?

We are still only in the early stages of seeing the impact of generative AI on companies. It will be interesting to see how the fundamental elements of data teams in the enterprise shift, whether it’s how data stacks are managed, what tools are prioritized, or how data teams are structured.

To dig into the full results of the research, download the report, The Data Stack Evolution: Legacy Challenges and AI Opportunities, here.